What can you do with an ISA specification?

ISA specifications describe the behaviour of a processor: the instructions, memory protection, the privilege mechanisms, debug mechanisms, etc. The traditional form of an ISA specification is as a paper document but, as ISAs have grown, this has become unwieldy. More importantly though, there are more and more potential uses for machine readable, mechanized, executable ISA specifications.

- Documentation

- Architecture design

- Verifying processor pipelines

- Compilers

- Software security

- Verifying software

Documentation

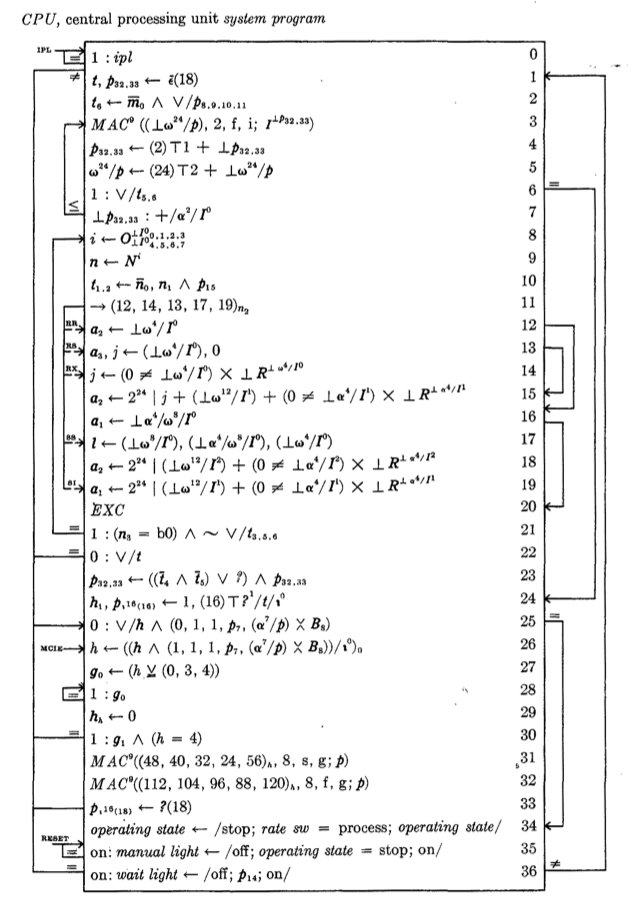

The most obvious purpose of an ISA specification is as documentation. An early formal notation is Bell and Newell’s “Instruction Set Processor” (ISP) notation [bell:afips:1970] that was used to write specifications for 14 systems including the PDP-8, PDP-11 and CDC 6600. ISP followed in the Algol language tradition and is similar to the less formal pseudocode notations typically used in ISA definitions in the present day. ISP was used during design of the PDP-11 and included in the manufacturer’s processor handbook [pdp11:book:1973].

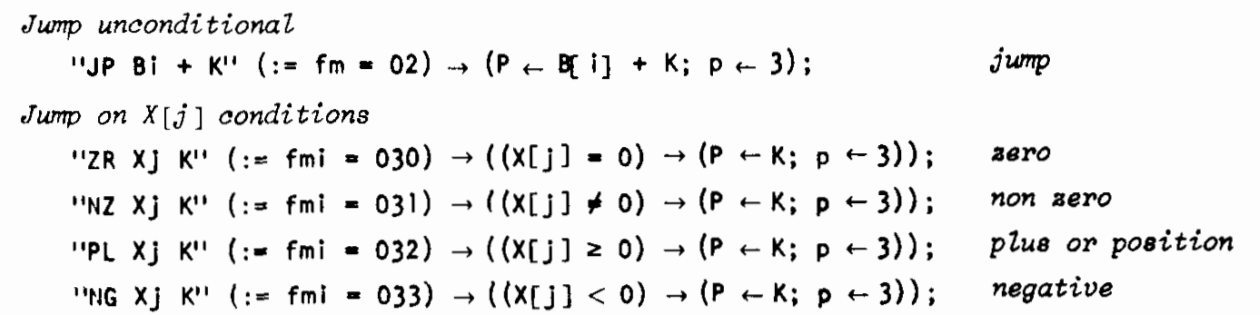

Here is a fragment of a specification of the CDC 6600 [bell:afips:1970] that shows how it compactly describes assembly syntax, instruction encoding and semantics.

An even earlier ISA specification was Falkoff et al.’s use of APL to describe the IBM System/360 [falkoff:ibm:1964]. However, given the novelty and unfamiliarity of APL at the time, it is not clear that the primary goal was documentation.

Architecture design

Although it is common for ISA specifications to be written or updated after the architecture has been designed or extended, a complete executable specification can be a useful aid to architects as they are developing or extending an architecture both by providing a clearer language (than natural language) for expressing their thoughts and by allowing architects to test that the changes behave as intended.

In addition, having the architects themselves write and test the changes to the specification simplifies the process of developing and maintaining ISA specifications and avoids the effort and errors associated with transcribing natural language documents and / or C++ simulators into some specification language.

Generating simulators

Shi’s Simlight simulator[shi:phd:2013, joloboff:dsetta:2015], was based on parsing Arm’s reference manuals, Fox’s MIPS specification [fox:itps:2015] written in L3 has been shown to boot FreeBSD, and my own work within Arm [reid:fmcad:2016] was later shown to be able to boot Linux. An especially interesting approach is the automatic generation of binary translations between architectures [bansal:osdi:2008].

Simulators vary significantly in performance: from around 10kHz (for an unoptimized interpreter modelling full address translation on every instruction fetch) to 500MHz for metatracing simulators like Pydgin [lockhart:ispass:2015].

Testing architecture design

ISA specifications are as prone to bugs as any other software of similar size and complexity. Fonseca et al.’s empirical study of the correctness of formally verified systems found bugs in specifications [fonseca:esc:2017]. So, before an ISA specification can be considered trustworthy, it must be tested or verified against an accurate oracle [barr:tse:2015] (usually hardware).

Different specification development efforts vary significantly in how much testing they perform: from a few 10s of tests per instruction to executing billions of instructions and booting OSes [fox:itp:2010, goel:fmcad:2014, flur:popl:2016, shi:phd:2013, reid:fmcad:2016, armstrong:popl:2019].

Formal verification of a processor against a specification [hunt:jar:1989, fox:ucam:2002, reid:cav:2016] has the desirable side-effect of detecting bugs in the specification and ensuring compatibility.

Automatic generation of test cases

Building a good testsuite is very laborious and error-prone but some of the effort can be avoided by automatically generating test cases. [martignoni:asplos:2012, godefroid:acmq:2012, campbell:fmics:2014].

In my experience though, most commonly used test generation techniques focus on achieving consistent levels of control-coverage (i.e., they focus on control flow graphs) and they are relatively weak at achieving consistent value coverage. In my adaptation of the concolic testcase generation technique described in [martignoni:asplos:2012], I was happy with the tests generated for instructions that set condition flags (e.g., ADD). Unfortunately, for instructions with just one control path such as signed multiply (SMUL), just one test would be generated when even the weakest hand-written testsuite would test for all combinations of positive, zero, and negative operands and would test for various overflow conditions. I feel that we still have more to learn here.

Verifying architecture design

We can use testing and verification to check that a specification matches existing implementations of an ISA. But we hit a chicken-and-egg situation when we want to check extensions to the specification: testsuites and processors are created after the specification is written so they cannot be used to test the specification as it is being written.

The solution used in [reid:oopsla:2017] and [bauereiss:ucam:2021] is to identify and formally verify important properties that the architecture must satisfy if it is to achieve its purpose and is not to break existing properties of the architecture. Often, the most important things to verify are security properties.

Verifying processor pipelines

With processor complexity rising (an inevitable result of both commercial pressures and the end of Moore’s law), formal verification of processors is increasingly important. Some processors that have been formally verified against their ISA specification include FM8502 [hunt:jar:1989], ARM6 [fox:ucam:2002], DLX [beyer:ijsttt:2006], five Arm processors [reid:cav:2016], Y86-64 [bryant:cmu:2018], Silver [loow:pldi:2019], and x86 [goel:spisa:2019, goel:cpp:2020, goel:cav:2021].

Compilers

Compiler generators

Barbacci developed Bell and Newell’s ISP notation into a machine readable notation “Instruction Set Processor Semantics” (ISPS) [barbacci2:computer:1973, barbacci:ieee:1981] that targets compiler-related uses such as the automatic derivation of compiler code generators by Fraser [fraser:sigart:1977] that used ISP specifications of the IBM-360 and PDP-10 and Cattell [cattell:toplas:1980] that used ISPS specifications of the IBM-360, PDP-8, PDP-10, PDP-11, Intel 8080, and Motorola 6800.

This topic seems to have largely died off until instruction selection in SLED [dias:popl:2010].

Discovery, verification and synthesis of peephole optimisations

One part of compilation that is especially well suited to automation is the discovery / generation of peephole optimizations using “superoptimization” [massalin:asplos:1987] (an exhaustive search). For example, Bansal’s superoptimizer [bansal:asplos:2006], Denali [joshi:pldi:2002], and Souper [mukherjee:oopsla:2020]. Where peephole optimizations are discovered and implemented manually, tools like Alive [lopes:pldi:2015] can be used to verify that the optimizations are correct.

Verifying compilers

Some of the earliest uses of formal semantics were for automatic reasoning about programs such as Samet’s development of Translation Validation [samet:ieeetse:1977, samet:phd:1975] (later reinvented and refined by Pnuelli [pnueli:tacas:1998] and Necula [necula:popl:1997]).

Verifying a simple “compiler” is now often part of masters / doctoral - level courses on using interactive theorem provers, some more complete compiler verifications include

- CompCert C compiler [leroy:cacm:2009],

- LISP compiler [myreen:tphols:2009],

- JIT compiler for LISP [myreen:popl:2010],

- Milawi theorem prover [myreen:itp:2011],

- CakeML compiler [kumar:popl:2014, fox:cpp:2017, tan:icfp:2016], and

- LLVM C compiler [lopes:pldi:2021].

Software security

Both “white-hat” and “black-hat” security engineers analyze binaries to find vulnerabilities and to construct signatures for detecting malware.

Some of the binary analysis tools used are

-

The Mayhem automatic exploit generation tool [cha:sandp:2012] based on CMU’s BAP binary analysis platform [brumley:cav:2011].

-

The angr tool [shoshitaishvili:sp:2016] that uses valgrind to convert binary code to the VEX intermediate representation that is then symbolically executed.

-

The McSema binary lifter that uses the Remill library and IDA Pro to convert (“lift”) binary machine code into LLVM code.

-

The Binsec/Rel relational symbolic execution that checks that binary code is constant time [daniel:sandp:2020].

-

[mycroft:esop:1999] and [noonan:pldi:2016] describe how to decompile machine code to higher-level languages.

-

The Serval symbolic evaluation tool is used to verify machine code microkernels, LLVM code and Linux BPF code [nelson:sosp:2019].

-

[dasgupta:pldi:2020] and [hendrix:itp:2019] describe the generation and checking of binary lifters.

-

[regehr:emsoft:2003, regehr:asplos:2004, regehr:lctes:2006] describe the automatic generation and use of abstract transfer functions to analyze binary programs.

-

[shoshitaishvili:sp:2016] is a recent(ish) survey of offensive techniques.

With the exception of [dasgupta:pldi:2020], none of these currently use formal ISA specifications. However, as the arms race between attackers and defenders hots up, there is an increasing need for the completeness and trustworthiness of a full formal ISA spec.

Verifying software

Last but not least is the use of ISA specifications is to verify software.

- Windows hypervisor code [maus:amast:2008].

- Hoare logic for machine code[myreen:fse:2007].

- Separation logic for machine code [jensen:popl:2013].

- Generating abstract interpreters for machine code [lim:toplas:2013].

- Parts of a operating system [goel:fmcad:2014, goel:phd:2016].

- The SeL4 capability-based microkernel[klein:sosp:2009, sewell:pldi:2013].

- A tiny Arm hypervisor [dam:trusted:2013, baumann:eucnc:2016].

- The Vale tool for writing verified assembly language [bond:usenix:2017].

- Verification in the presence of TLBs[syeda:itp:2018] (see also [simner:pls:2020]).

- A symbolically executable hypervisor [nordholz:eurosys:2020].

- The Isla symbolic execution tool based on SAIL [armstrong:cav:2021].

- The SeKVM hypervisor [li:usenix:2021].

- An Arm hypervisor[tao:sosp:2021].

- Proving LTL properties of binaries [liu:arxiv:2021].

- Generating instruction encoders/decoders [xu:cav:2021].

The opinions expressed are my own views and not my employer's.